Software architecture is the high-level structure that guides the development and implementation of software systems. As software becomes more pervasive, new architectural styles continue to evolve. In this crowded field, the microservices architecture has gained considerable traction. Let’s take a deep dive into microservices, how they communicate via APIs, and how tools like Swagger and SwaggerHub can help.

What are Microservices?

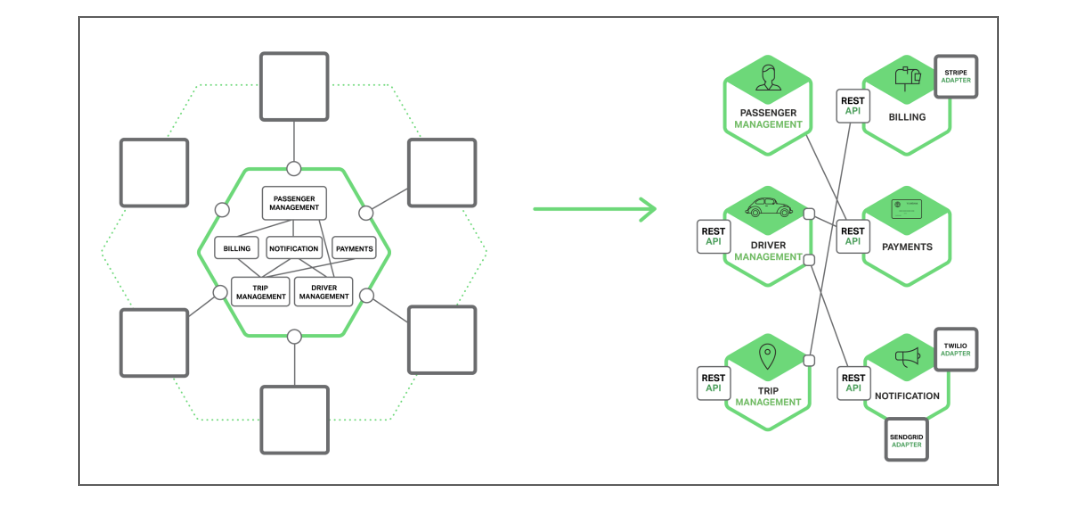

Microservices, also known as the microservice architecture, is an architectural style that structures an application as a collection of loosely coupled services, each of which implements a business capability. The microservice architecture enables the continuous delivery and deployment of large, complex applications with minimal need for centralization. It also enables an organization to evolve its technology stack, scale, and become more resilient over time.

(Image Source: NGINX Blog)

Why Microservices?

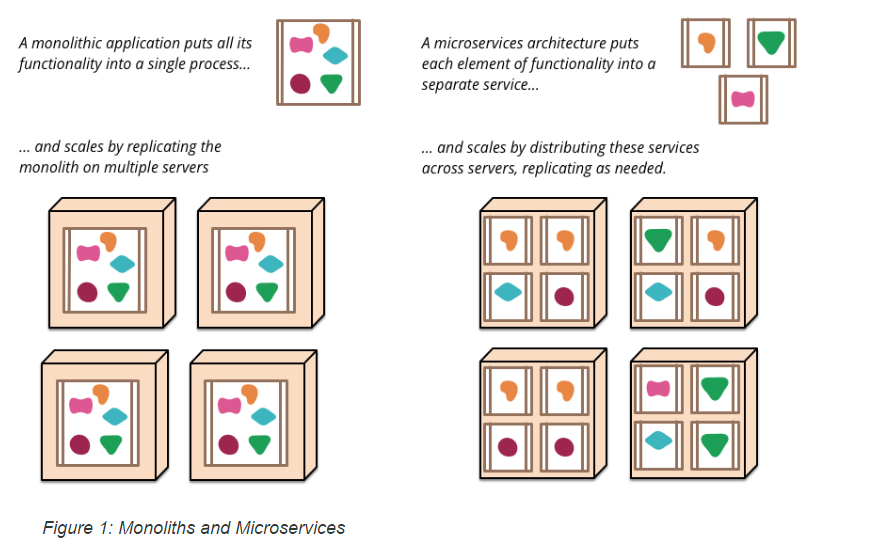

To understand the popularity of microservices, it’s important to understand monolithic applications. A typical application consists of a client-side interface, a database for storing and handling data, and server-side logic. A monolithic application is built as one single unit: the server-side logic is bundled together, with all the logic for handling requests running in a single process. Every update to this system entails deploying a new version of the whole server-side application. While there are, and have been, thousands of successful monolithic applications in the market, development teams are starting to feel the brunt of such large-scale deployment operations. For example, in a monolithic application, any small modification is tied to an entire change cycle, and these cycles are invariably tied to one another. Granular scaling of specific functions and modules is also not possible, as teams have to scale the entire application.

(Image Source: martinfowler.com)

To overcome the growing complexity of managing, updating, and deploying applications, particularly in the cloud, teams began adopting the microservices architecture. Each microservice covers a specific business function and only defines operations that pertain to that function, which makes it lightweight and portable. Microservices can be deployed and run on smaller hosts, and do not need the RAM and CPU resources of a traditional architecture. Services can also be developed in different languages depending on the use case, so a message-board service can be built in Ruby, and the service delivering those messages to different users can be built in Node. All of the above have contributed to the massive adoption of microservice-based architecture, one of the most prominent examples being Netflix.

Is this SOA?

Logically separating functional units within an application is not a new concept. Microservices are most commonly compared with Service Oriented Architecture (SOA). I won’t go too deep on this, but suffice it to say that microservices and SOA differ in quite a few areas. SOA works at the interface level, aiming to expose functions as service interfaces. These interfaces make it easier to use their data and operations in the next generation of applications. The scope of SOA is the standardization of interfaces between applications, while microservices have an application-level scope.

Drawbacks of Microservices

As with any architectural style, microservices have a few disadvantages. One big issue is decomposing the application’s business capabilities into multiple granular units, because drawing the line around sub-business units can be tricky. Poorly defined boundaries can have a negative impact on scaling applications. It can also be hard to reuse code across different services, especially when they are written in different languages. Keeping track of the hosts running the various services can be tedious, and orchestrating these components can lead to confusion and wasted resources. Microservice architecture also requires technical skill to pull off effectively, and is a big transition for developers accustomed to traditional forms of application engineering.

How Do Containers Help?

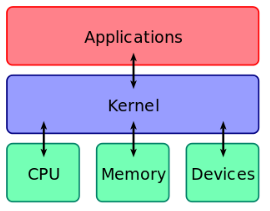

The adoption of the microservices architecture created a need to overcome some of its drawbacks, which fueled the rise of the container ecosystem. A container is a form of operating system virtualization, which allows various services to run in resource-independent units. Linux containers make use of the kernel interface. The kernel is the core program of an operating system, and is one of the first programs loaded on startup, right after the bootloader.

(Image Source: wikipedia)

A container’s configuration allows multiple containers to share the same kernel while running simultaneously and independently of each other. OS-level virtualization makes the transportation and deployment of code more efficient than with traditional VMs, which virtualize the hardware and each run a full guest operating system. Docker is the most popular container technology and, in a very short time, has become the standard Linux kernel-based container platform used across thousands of applications. The independence between containers on the same host makes deploying microservices built on different technologies and frameworks straightforward. The portability and light weight of containers are further advantages for microservice deployment. To solve the issue of microservice management, various container management systems have emerged to better manage and orchestrate these containers, as well as to configure and administer the entire server cluster. Here’s a great post comparing a few popular ones.

The Role of APIs

We know microservices are independently maintained components that make up a larger application. We also know containers are a great way to deploy them. Microservices and containers use an inter‑process communication (IPC) mechanism to interact with each other. These interactions could be:

- One to one, where client requests are handled by one service

- One to many, where client requests are handled by multiple services

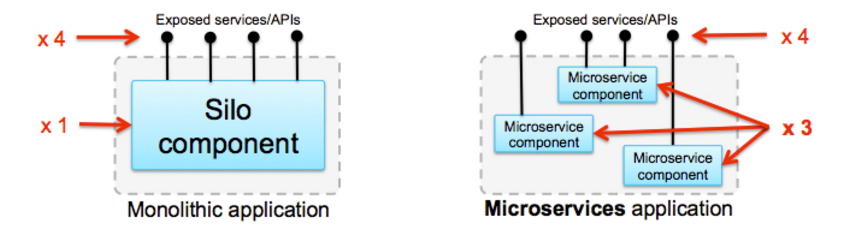

If the IPC mechanism involves a request-response cycle, the most common means of communication is a RESTful API, which almost always uses HTTP and JSON. APIs used for IPC between microservices differ from traditional APIs in that they are more granular. Since the APIs that expose microservices return fine-grained data, clients may have to follow a one-to-many form of interaction, calling several services to obtain the data they need.

(Image Source: IBM developerWorks)

For example, say you’re building an e-commerce website following the microservices pattern. The complete picture of a product being sold could be painted by multiple services: a service to expose basic product info such as title and description, a service to expose the price, a service to expose the reviews, and so on. A client wishing to obtain the full data of the product would now have to call all of these services. In a multi-device ecosystem, different devices could also need different data. A desktop application, for example, may need more elaborate data than a mobile version.
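The one-to-many interaction in this example can be sketched in a few lines of Python. This is only an illustration: the three service functions are hypothetical in-memory stand-ins for what would be separate HTTP services, and the rule that mobile clients skip reviews is an assumption made to show device-specific responses.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-ins for three independent microservices.
# In a real system each would be an HTTP call to a separate service.
def product_info_service(product_id):
    return {"title": "Espresso Machine", "description": "15-bar pump espresso maker"}

def price_service(product_id):
    return {"price": 199.99, "currency": "USD"}

def review_service(product_id):
    return {"reviews": [{"rating": 5, "text": "Great crema"}]}

SERVICES = {
    "info": product_info_service,
    "price": price_service,
    "reviews": review_service,
}

def get_product(product_id, device="desktop"):
    """Assemble the full product view by calling several services."""
    # Assumption for illustration: mobile clients do not need reviews.
    wanted = ["info", "price"] if device == "mobile" else ["info", "price", "reviews"]
    product = {"id": product_id}
    # Call the services concurrently, then merge their partial answers.
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(SERVICES[name], product_id) for name in wanted}
        for name in wanted:
            product.update(futures[name].result())
    return product

print(get_product("sku-1"))
print(get_product("sku-1", device="mobile"))
```

The client-side cost is visible here: one logical "get product" request fans out into two or three service calls, depending on the device.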

Design First Approach to Microservices

We’ve already discussed how APIs have become the most common way for services to communicate with each other. To expose services well via APIs, each API needs a common interface that states exactly what the service is supposed to do. This interface is a contract that defines the SLA between the client and the services. The OpenAPI (Swagger) Specification has emerged as the standard format for defining this contract in a way that’s both human and machine readable, making it easier for services to communicate effectively and to orchestrate the entire application. Microservice architecture is predominantly an enterprise-grade undertaking, and as such, it’s important to give the APIs that expose microservice data first-class treatment. Many organizations have adopted a Design First approach to building microservices, which involves designing and defining the interface of the microservice first, reviewing and stabilizing this contract, and only then implementing the service. The Design First approach ensures that your services comply with the requirements of the client upfront, before any actual development has taken place. Here are some key considerations for adopting a Design First (or API First) approach to APIs:
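To make the idea of a contract concrete, here is a minimal sketch of Design First in Python. The contract is a small OpenAPI 3.0 document written as a dictionary, and the service implementation is checked against it afterwards. The price service, its path, and the field names are hypothetical, and `conforms` is a deliberately tiny hand-written check, not a full JSON Schema or OpenAPI validator.

```python
# Step 1: design the contract first (hypothetical price service).
PRICE_API_CONTRACT = {
    "openapi": "3.0.0",
    "info": {"title": "Price Service", "version": "1.0.0"},
    "paths": {
        "/products/{productId}/price": {
            "get": {
                "operationId": "getProductPrice",
                "parameters": [
                    {"name": "productId", "in": "path", "required": True,
                     "schema": {"type": "string"}}
                ],
                "responses": {
                    "200": {
                        "description": "Current price of the product",
                        "content": {
                            "application/json": {
                                "schema": {
                                    "type": "object",
                                    "required": ["amount", "currency"],
                                    "properties": {
                                        "amount": {"type": "number"},
                                        "currency": {"type": "string"},
                                    },
                                }
                            }
                        },
                    }
                },
            }
        }
    },
}

# Only the two JSON types used by this contract are mapped.
JSON_TYPES = {"number": (int, float), "string": str}

def response_schema(contract, path, method, status):
    """Look up the JSON response schema for one operation in the contract."""
    operation = contract["paths"][path][method]
    return operation["responses"][status]["content"]["application/json"]["schema"]

def conforms(payload, schema):
    """Check required fields and declared property types only."""
    for name in schema.get("required", []):
        if name not in payload:
            return False
    for name, value in payload.items():
        declared = schema["properties"].get(name)
        if declared and not isinstance(value, JSON_TYPES[declared["type"]]):
            return False
    return True

# Step 2: implement the service only after the contract is agreed.
def get_product_price(product_id):
    return {"amount": 199.99, "currency": "USD"}

schema = response_schema(PRICE_API_CONTRACT, "/products/{productId}/price", "get", "200")
print(conforms(get_product_price("sku-1"), schema))
```

Because the contract exists before the implementation, the client team can build against it, and the service team can test against it, without waiting on each other.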

Speed to Market

By their very nature, microservices are internal, and are meant to use minimal compute power and running time. The same applies to the APIs that expose them. As such, it’s important to automate the design, documentation, and development of these APIs to deliver them quickly. The Swagger interface not only defines the common SLA between clients and services, it also allows for generating server and client templates, as well as test cases, which makes development and testing much easier. Professional tools like SwaggerHub, which offers a smart API editor for intelligent design and Domains to speed up API design, can help you bring your APIs to market quickly.

Flexible Orchestration

We spoke about how APIs exposing microservices are granular and fine-grained, meaning client apps that need data for a specific entity will often have to call multiple APIs. Complexity in handling data exchange for microservice-based applications is a huge issue. The solution for handling multiple service requests is to implement an API gateway, which processes requests through a single entry point. Since the contract of every API on the gateway is defined in the OpenAPI/Swagger Specification, moving from the design phase to the deployment phase via an API gateway becomes very easy. SwaggerHub’s direct integrations with API gateways from AWS and Azure, and with IBM API Connect, are engineered for organizations that want to orchestrate these requests efficiently.
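The single-entry-point idea can be illustrated with a toy gateway in Python. This is a sketch under stated assumptions, not how any particular gateway product works: the `ApiGateway` class, the path prefixes, and the three backend functions are all hypothetical, and real gateways also handle concerns such as authentication and rate limiting that are left out here.

```python
# Hypothetical backends; in practice these would be separate services.
def product_service(path):
    return {"service": "product-info", "path": path}

def price_service(path):
    return {"service": "price", "path": path}

def review_service(path):
    return {"service": "reviews", "path": path}

class ApiGateway:
    """Routes every incoming request through one entry point."""

    def __init__(self):
        self._routes = {}

    def register(self, prefix, handler):
        # Map a path prefix such as "/prices" to the service that owns it.
        self._routes[prefix] = handler

    def handle(self, path):
        # Try the longest prefixes first so more specific routes win.
        for prefix in sorted(self._routes, key=len, reverse=True):
            if path == prefix or path.startswith(prefix + "/"):
                return 200, self._routes[prefix](path)
        return 404, {"error": "no service registered for " + path}

gateway = ApiGateway()
gateway.register("/products", product_service)
gateway.register("/prices", price_service)
gateway.register("/reviews", review_service)

print(gateway.handle("/prices/sku-1"))
print(gateway.handle("/unknown"))
```

Clients only need to know the gateway's address. Which service answers a given path is a routing detail that can change without touching the clients.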

Responsible Design

Having multiple teams build different services can be tricky. Not only is managing your human resources difficult, it gets even more complicated when these teams start developing each service’s interface. When multiple teams define and build RESTful interfaces, design patterns very commonly diverge in URL structure, response headers, and request-response objects. Since the interface defines how clients and services interact, differences in design lead to confusion and overhead during the development phase of your services. Repairing them afterwards costs time, money, and human capital. This can be overcome using two of SwaggerHub’s core design features: Domains and Style Validator. The Style Validator ensures your RESTful interface adheres to a standard blueprint based on your organizational requirements. This could mean ensuring all interfaces have camelCase operators, examples defined in their response packets, or models defined locally. In Domains, you can store common, reusable syntax, be it models or request-response objects, that can be quickly referenced across the multiple APIs that expose your microservice business capabilities. This gives you finer-grained control over your API styles, with one control center for all of the RESTful designs that expose your services. Domains and Style Validator are unique to SwaggerHub, and ensure design consistency and quick time-to-market when building your service IPCs.
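To give a feel for what an automated style check does, here is a small Python sketch. It is not SwaggerHub's Style Validator and does not use its rules or API. It is a hand-written illustration of two checks of the kind described above, with two assumptions: that "camelCase operators" refers to each operation's `operationId`, and that a response example lives under the `example` or `examples` key of an OpenAPI 3.0 media type. The two sample specs are hypothetical.

```python
import re

CAMEL_CASE = re.compile(r"^[a-z][a-z0-9]*(?:[A-Z][a-z0-9]*)*$")
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options"}

def style_violations(spec):
    """Return a list of human-readable style problems found in an OpenAPI dict."""
    problems = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            label = f"{method.upper()} {path}"
            operation_id = operation.get("operationId", "")
            if not CAMEL_CASE.match(operation_id):
                problems.append(f"{label}: operationId {operation_id!r} is not camelCase")
            for status, response in operation.get("responses", {}).items():
                for media in response.get("content", {}).values():
                    if "example" not in media and "examples" not in media:
                        problems.append(f"{label}: response {status} has no example")
    return problems

# Hypothetical spec fragment from one team: follows both rules.
good_spec = {
    "paths": {
        "/products/{productId}/price": {
            "get": {
                "operationId": "getProductPrice",
                "responses": {"200": {"description": "OK", "content": {
                    "application/json": {"example": {"amount": 199.99, "currency": "USD"}}
                }}},
            }
        }
    }
}

# Hypothetical spec fragment from another team: breaks both rules.
bad_spec = {
    "paths": {
        "/reviews": {
            "get": {
                "operationId": "Get_Reviews",
                "responses": {"200": {"description": "OK", "content": {
                    "application/json": {"schema": {"type": "array"}}
                }}},
            }
        }
    }
}

print(style_violations(good_spec))
for problem in style_violations(bad_spec):
    print(problem)
```

Running the same rule set against every team's definition catches divergence at design time, before any service code depends on it.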
